What if every pilot, air traffic controller, and airport operator had a digital assistant?

Started in June 2023, JARVIS is a SESAR Joint Undertaking project that is prototyping and validating three digital assistants: one for pilots, one for air traffic controllers (ATCOs) and one for airports. It takes a forward-thinking approach by asking how these prototypes can be deployed in the real world.

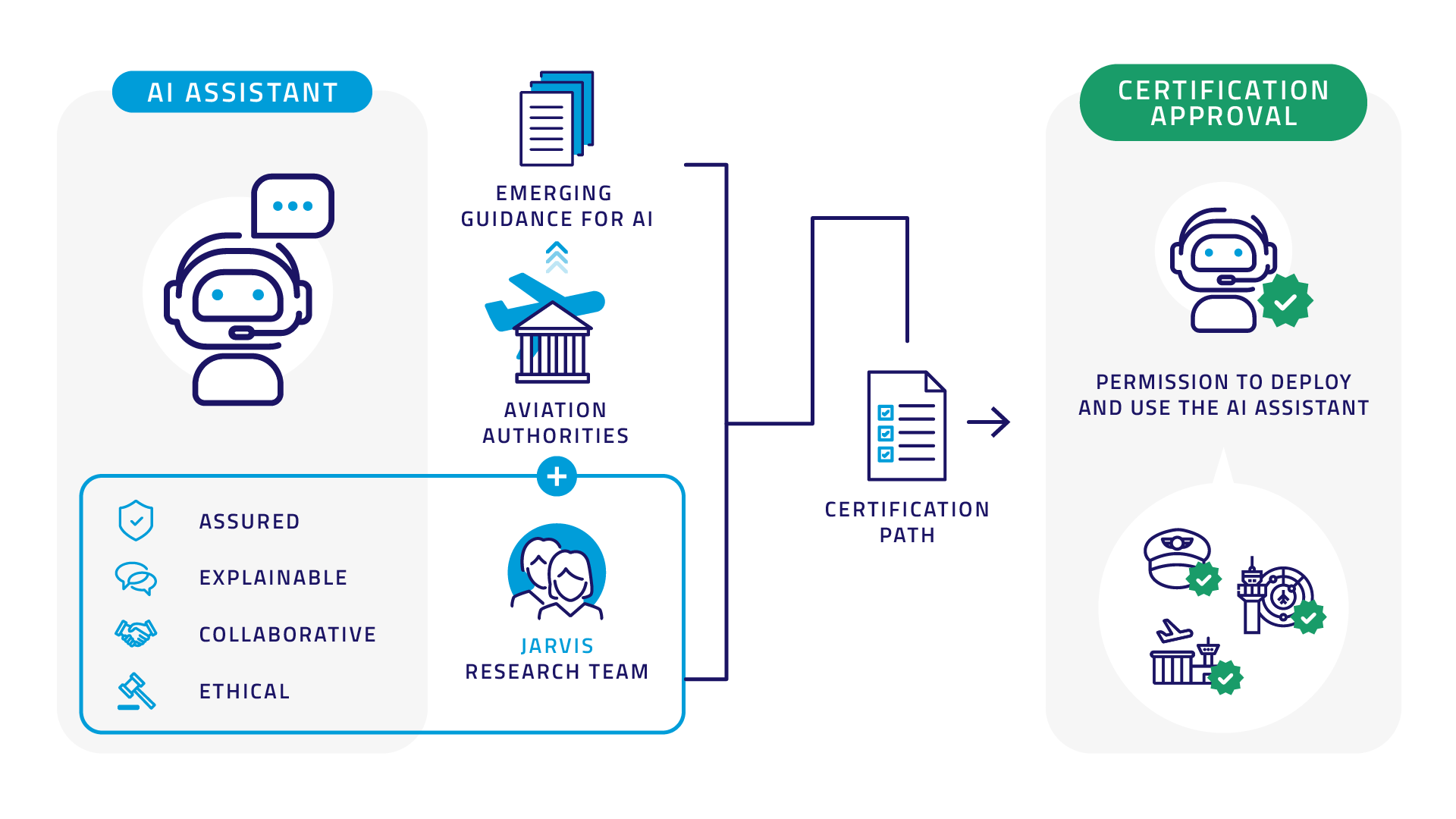

Introducing digital assistants into operational scenarios raises two considerations:

1. Some of the assistant’s functions are safety-critical and therefore must be certified.

2. JARVIS uses Artificial Intelligence (AI) technologies. Certification guidance for AI is still emerging and not yet finalized (key examples include the EASA AI Concept Paper [1] and the upcoming ED-324 standard from EUROCAE WG-114 / SAE G-34 [2]).

Building a certification path for technologies that are new to aviation (such as AI) is critical for their viability and successful deployment, and this is where JARVIS steps in. The team has identified several high-level properties that enable future certification approvals of AI assistants.

First, AI assistants must be assured, meaning that they must follow a development assurance process, just like traditional software and hardware. AI technologies raise assurance issues that existing standards, such as DO-178C [3] or DO-254 [4], do not directly address. These issues include, but are not limited to, the generalization and robustness of Machine Learning (ML) based models (including reinforcement learning), as well as the determinism and timing of logic reasoning models. Both kinds of model are used in JARVIS. Additionally, AI poses new system-level challenges, since AI models and constituents need to be integrated into larger (often existing) systems and platforms.

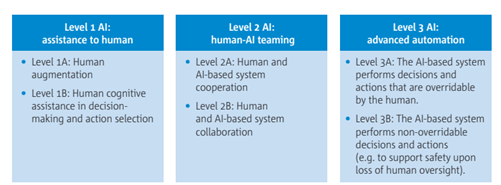

Second, AI assistants need to be explainable and collaborative. As automated systems become more advanced and enable complex human-machine interaction and collaboration, the concept of Human-AI Teaming (HAT) has emerged as a cornerstone of AI trustworthiness. HAT is essential for creating systems that efficiently help human users achieve their goals. This concept is particularly relevant for highly automated AI-enabled systems, such as EASA Levels 2B and higher, yet HAT principles and objectives are also important for Levels 1B/2A, which JARVIS focuses on. The operational explainability of a system’s actions and decisions, interface design and interaction modalities are all important ingredients for achieving safe and certifiable HAT.

Figure: EASA AI Roadmap levels of automation.

Finally, AI ethics is a critical building block for AI trustworthiness. Ethical aspects of AI assistants that interact with human users range from privacy and non-discrimination to accountability and liability concerns. These topics must be carefully addressed, both to gain trust in the AI system (and avoid losing it) and to comply with AI regulation (the EU AI Act). To this end, the JARVIS team has drafted an AI ethics assessment approach and is currently evaluating it on several use cases.

Building a certification path requires alignment with emerging aviation guidance. Given the unique challenges that AI presents, early and active engagement with aviation authorities and standardization committees is crucial for future approvals of AI. This is why the JARVIS project is collaborating directly with EASA, focusing on the AI assistant properties described above. These properties are well aligned with EASA's trustworthy AI building blocks. The results of the joint discussions, together with the lessons learned from JARVIS validation exercises, will inform future versions of the EASA AI Roadmap and AI Concept Paper. This collaboration will help shape the key aspects of AI assurance, HAT and ethics, paving the way for the safe and effective integration of AI in aviation.

References:

[1] European Union Aviation Safety Agency (EASA), “EASA Concept Paper Issue 2: Guidance for Level 1&2 Machine Learning Applications”, EASA, March 2024.

[2] SAE G-34 / EUROCAE WG-114, “Recommended Practice for Development and Certification Approval of Aeronautical Products Implementing AI”, Draft ED-324/ARP6983 for EUROCAE Open Consultation, August 2025.

[3] RTCA DO-178C / EUROCAE ED-12C, “Software Considerations in Airborne Systems and Equipment Certification”, 2011.

[4] RTCA DO-254 / EUROCAE ED-80, “Design Assurance Guidance for Airborne Electronic Hardware”, 2000.