JARVIS is an EU-funded project developing three artificial intelligence-based (AI-based) digital assistants (DAs) and several features to work alongside human counterparts, ensuring safer and more efficient operations. Throughout the course of the project, the DA teams are collaborating with the Foundational AI team, which focuses on researching three key foundational AI capabilities: AI design assurance, human-AI teaming, and dataset creation.

To facilitate smooth and effective collaboration, the Foundational AI team periodically organizes engagement activities with the DA research teams, in the form of workshops, separate meetings or surveys, to collect emerging lessons learned and best practices, including specific design decisions, customisations, and AI methods. Similarly, the Foundational AI team periodically provides guidance to all solutions, sharing lessons learned as well as emerging trends and guidelines from the scientific community.

This ongoing, two-way exchange enhances both the research activities on Foundational AI topics and the design of the assistants, ensuring that the project outcomes are robust, thoroughly informed, and truly reflective of the expertise of the partners involved.

Dataset creation

The dataset creation challenge addresses data collection issues that are common in the aviation domain and aims to provide key takeaways. For instance, the decisions taken by human operators such as air traffic controllers provide valuable data for machine learning. In this case, balancing the need for relevant context data against respecting data protection rights and avoiding profiling can prove to be a significant challenge.

Moreover, today's air traffic management operations achieve a high level of safety, with rare instances of safety-critical events. This superior performance of aviation, compared to other modes of transportation, poses a challenge when it comes to creating comprehensive datasets containing sufficient information about safety-critical events.

JARVIS will provide lessons learned and insights into how these challenges were tackled during the development of the digital assistants.

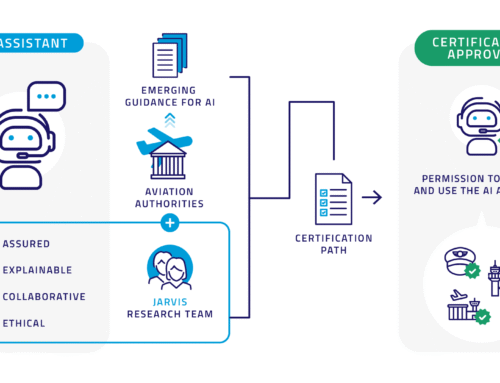

AI Design Assurance

AI design assurance is a structured process aimed at ensuring the trustworthiness of AI systems throughout their lifecycle. The trustworthiness of AI refers to the degree to which an AI system can be relied upon to perform its intended functions accurately, reliably, safely, and ethically. Ensuring the trustworthiness of AI is essential for fostering public acceptance, regulatory compliance, and the responsible adoption of AI technology across various domains, including aviation.

The JARVIS digital assistants will utilize a range of AI methods, including machine learning, reinforcement learning and machine reasoning. The generalization and robustness of these approaches, as well as their explainability, are considered AI assurance challenges particularly relevant within JARVIS.

The digital assistants developed within JARVIS will tackle these challenges and evaluate means of compliance proposed in the literature and by EASA. Using this approach, JARVIS will provide lessons learned on how different AI methodologies relate to the challenges and how assurance can be achieved and evaluated.

Human-AI Teaming

Human operators in air traffic management, such as airport managers, air traffic controllers and pilots, should be supported by the JARVIS digital assistants and work seamlessly with them. As observed in human-human teams, a team's performance depends on the quality of the teamwork processes. Therefore, the AI challenge in human-AI teaming centres on how digital assistants should be designed to function as good team members that are able to cooperate or even collaborate with humans.

AI is less predictable than classical automation. Decisions regarding the AI methods and interface design should therefore consider how to create an AI that is transparent and quickly interpretable for the human operator. The interface design should prevent cognitive overload by presenting only the relevant information necessary to achieve operational explainability. Additionally, the interaction between the human and AI should be adapted to the state of the human operator.

JARVIS will analyse to what extent findings from human-human teaming can be transferred to the human-AI teaming challenges. Furthermore, lessons learned will be provided on what is needed to enable cooperation within human-AI teams, focusing on specific guidelines for human-AI teaming interaction design.